This post is part of a series on Mohammad Anwar’s excellent Weekly Challenge, where hackers submit solutions in Perl, Raku, or any other language, to two different challenges every week. (It’s a lot of fun, if you’re into that sort of thing.)

This week’s tasks have a little bit more meat on their bones, which I quite enjoyed. They are user-submitted, as well, and it’s always fun to see what people come up with when submitting tasks.

Task 1 – Banking Day Offset

This task comes from the mind of Lee Johnson. Here, we’re given the following inputs:

- Number of days [

$offset] - Start date [

$start_date] - List of dates which are holidays (optional) [

@holidays]

From that, we’re supposed to return the date that is $offset working days from the $start_date, ignoring weekends and @holidays. This is straightforward. I opted to use the core Perl module Time::Piece to get the day of the week. There are about n + 1 different ways to do that, though.

The function starts with some initialization:

sub bank_holiday_ofs {

my ($start_date, $offset, @holidays) = @_;

my $t = Time::Piece->strptime($start_date => $date_fmt) - 86400;

my %holiday = map { $_ => 1 } @holidays;

$offset++; # Account for today

The %holiday hash simply maps the dates to a true value so $holiday{$date} will be true iff $date is in @holidays. The $offset variable gets an extra kick to account for the current day.

From here, we loop until $offset is zero:

while ($offset) {

$t += 86400; # Advance day

$offset-- unless $t->wday == 1 or $t->wday == 7

or $holiday{ $t->strftime($date_fmt) };

}

From years of frequent use, 86,400 has sufficient semantic meaning as “1 day” that I’m not bothered about the naughty magic number. If that’s not your style, Time::Seconds has a ONE_DAY constant.

The loop is simple. Add a day, and then subtract a day from $offset unless it’s a weekend or holiday.

Task 2 – Line Parser

The second task, from Gabor Szabo, gives us a particular text record format that we must parse into a Perl hash. For example:

{% id field1="value1" field2="value2" field3=42 %}

Should become:

{

name => id,

fields => {

field1 => value1,

field2 => value2,

field3 => value3,

}

}

We are also required to handle escaped quotes (\") and (optionally) multiline tags. I wrote a pure-Perl parser that handles all of this. It’s easiest to think of in two parts:

Top level line parser

This is the part that takes in a line of text and decides what to do with it. First, let’s define our $Open and $Close tags:

my ($O, $C) = (qr/^\s*\{\%\s*/, qr/\s*\%\}\s*$/); # Tokens gobble whitespace

I could have just done a simple {% and %} set, but I wanted to allow optional whitespace. A recurring theme you’ll see with my solution (and my parsers in general) is that I tend to be permissive with inputs, but precise with outputs.

Now I loop through each line of input (in this case, the __DATA__ block):

for (<DATA>) {

chomp;

if ($id) {

if (/${O}end$id${C}/) {

$id{$id}{text} = @text > 1 ? [ @text ] : $text[0];

@text = ();

$id = undef;

} else {

push @text, $_

}

}

elsif (/${O}(?<id>\w+)\s+(?<fields>.+?)${C}/) {

die "No end token found for <$id>" if $id and @text;

$id = $+{id};

die "Duplicate id <$id>" if exists $id{$id};

$id{$id} = { name => $id, fields => parse_fields($+{fields}) };

}

else {

die "Invalid line: <$_>";

}

}

The way this loop works is, if $id is defined, we’ve already seen an open {% tag and we’re expecting either a line of text, or the {% endid %} closing tag, so we look for those and handle them accordingly.

Otherwise, we expect to see a single line {% id key=value, ... %} record or the start of a multi-line record, so we look for that. We pass the key/value portion of the record to the parse_fields() sub, which we’ll look at next.

parse_fields(): The key/value (kv) parser

It might seem like parsing keys and values would be the easy part, and if not for Gabor’s requirement to handle escaped quotes, it might have been. There are several ways I could have tackled this, from tricky eval()s to full-blown grammars, but for my purposes here (and because I felt like it), I decided to implement a simple state machine.

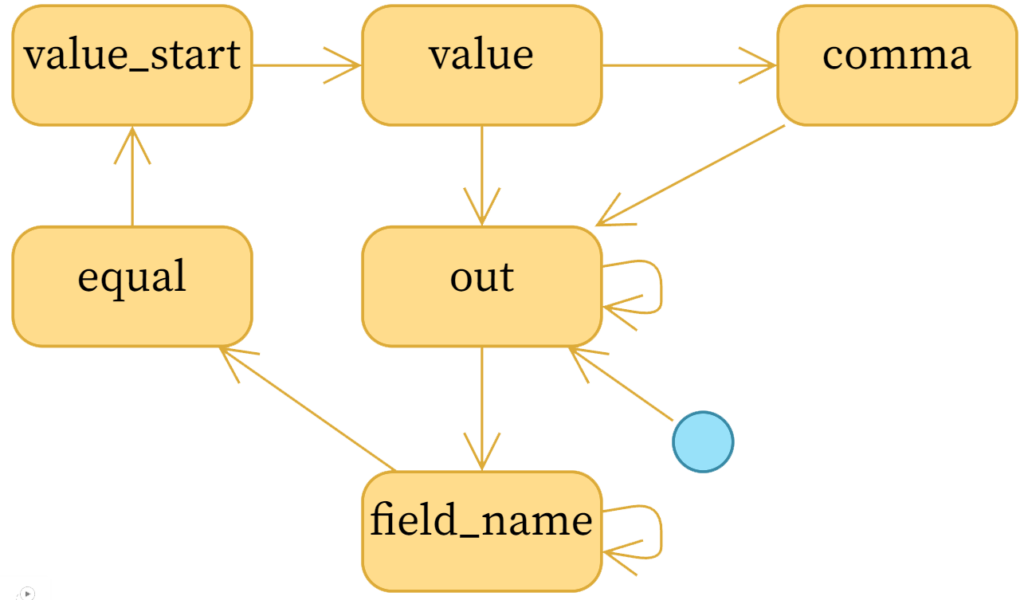

Finite state machines can be described by a directed graph whose vertices contain the possible states the system can be in, and edges are the possible state transitions. To parse the kv pairs as described by this task, the following state machine will do the trick:

Note: To avoid a cluttered diagram, I’ve omitted arrows on most states that point to themselves, except for field_name and out. It so happens that every state in this particular system can have itself as the next state.

We effectively start from the out state, meaning we are outside of a key/value pair and are waiting for the start of the next key name. Once we see a word character (\w), we go to the field_name state and stay there until we’ve gobbled up all of the \w characters, and then we look for an equal sign. We similarly trundle through the states to find the start of the value (value_start), the value itself, then a comma, and back to out for the next field. We can stop at any time.

One way to implement simple state machines is by simply having a $state variable that you set to the name of the state you’re in, and an if ... elsif ... chain to handle the state transitions. For more complicated, or dynamic state machines, you might reach for one of the many CPAN modules for finite state machines (FSM). But I wanted to show you how it can be done without any help.

My parse_fields() function iterates over the input string character by character. First, we have some top level variables to keep track of:

my %fields;

my $state = 'out'; # Outside of KV pair

my $backslash = 0; # Substate for whether we're backslashed

my $name = undef; # Field name

my $value = undef; # Field value

my $expected_closing_quote; # If defined, value must end with this

Our output will be %fields. The $backslash variable actually captures a parallel state of whether the last character was a backslash (1), whether the current character was escaped (2), or whether neither of those things is true (0). I might have used named values instead, but this made sufficient sense to me.

I decided values could be optionally quoted, by single ('), double (") or nothing, but that the closing quote had to match the opening quote. So that’s what $expected_closing_quote keeps track of.

Now, here’s how we handle backslashes:

$backslash = 0 if $backslash == 2;

if ($backslash) {

$_ = eval "\$_"; # safe

$backslash = 2;

} elsif (/\\/) {

$backslash = 1;

next;

}

I do use eval here, but you’ll note it’s done in a safe manner, as I only ever pass two characters to eval, and the first character is a backslash. I could have built up my own hash of slash characters, but that would be error prone and require updating with the language.

If the current character is a backslash, we just set $backslash and go to the next character. If the previous character was a backslash, we do the eval to get the unescaped character. We then set $backslash to 2, which is used when we’re looking for quotes, to avoid ending the value on an escaped quote.

Here’s what a typical state handler looks like:

# Handle the value, with optional quotes and escape sequences

elsif ($state eq 'value_start') {

next if /\s/;

if (/['"]/ and not $backslash) {

$expected_closing_quote = $_;

$state = 'value';

$value = '';

next;

}

$value = $_;

$state = 'value';

}

That is the value state. You’ll see that has the logic I just talked about; if we see our $expected_closing_quote and it wasn’t $backslashed, or we see whitespace and the value is not quoted at all, we have reached the end of the value. So we set $fields{$name} = $value (that’s now part of our return value), set our next state to comma, and go to the next character.

On the other hand, if none of those conditions were true, that means we’re still inside the value, so we append the character to $value and continue. (The value state points to itself.)

I won’t show all of the states here, as the above should give you the flavor of it, but of course you can see my full solution here.

All in all, it’s decently robust for a PWC task solution, but a production version could certainly use some better error checking and reporting. Invalid inputs are mostly handled fairly gracefully, but the resulting output might be confusing.